A Practical Guide to Data-Centric AI

- Samira Shaikh & Daniel Lieb


At Ally, we are relentlessly focused on “Doing it Right” and being a trusted financial services provider to our private, commercial, and corporate customers. As part of this focus, we are fiercely committed to innovation: our business and technical teams are built to continuously innovate and reinvent in order to deliver our best products and services to our customers. In this blog post, we discuss how Ally puts its values and commitments into practice within Conversational AI.

The financial industry, like every other sector, has seen increasing adoption of Artificial Intelligence (AI) models (Gartner report). The hype around AI has grown alongside the advances of recent years. These advances, which notably include transformer-based models (Transformers model paper), have been touted as disrupting the landscape of learning and intelligence as we know it, with some even claiming sentience in large language models. The capabilities of these models seem spectacular, particularly when they generate language that is often indistinguishable from human writing (e.g., GPT-3 and its predecessor GPT-2). Yet although these models are impressive, and have set and surpassed difficult benchmarks (notably the SQuAD question-answering benchmark), their benchmark performance has often failed to translate to the real world.

AI Models in the Lab vs. Real World

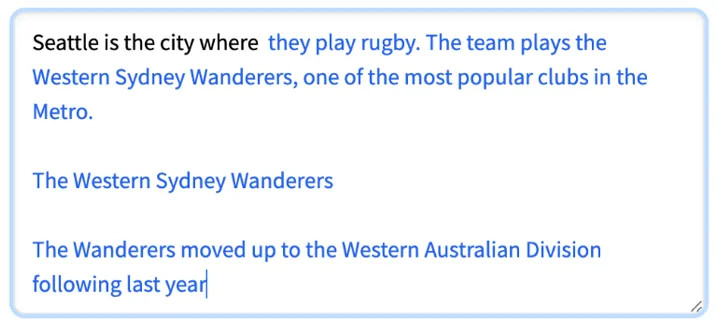

It is common across the industry to deploy models that are considered state-of-the-art for their respective tasks (e.g., image recognition and entity extraction) but that do not reach the same level of accuracy in the real world, where tasks may be more ambiguous. The example in Figure 1 is text generated by GPT-2 (https://huggingface.co/gpt2) for the prompt, “Seattle is the city where”.

Note that although the model generates coherent text (highlighted in blue), the statements are completely false: the Western Sydney Wanderers are an Australian A-League soccer team, not a rugby team from Seattle. Further, they play in the National Division, not the Western Australian Division.

Recent efforts in the AI community have focused on scaling models to billions of parameters trained on ever-larger datasets, at the cost of vast amounts of GPU and TPU time (e.g., Google’s LaMDA model). While these efforts are commendable, in that they allow us to test the limits of large models, they come at the cost of interpretability: the models and their training data are a dense fog that cannot be navigated to understand why the models behave the way they do.

As the text generation example illustrates, adding more data to the model will not address the underlying issue and can, in fact, result in the system stating even more falsehoods. Additional data can introduce more features, and with them more spurious correlations for the model to learn and wrongly predict from.
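The toy experiment below is our own illustration, not an analysis from the original post, of how extra features can create spurious correlations. It assumes a fixed number of samples, a label that is pure noise, and features that are also pure noise, so any correlation between them is spurious by construction. The function names are hypothetical.

```python
import math
import random


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def max_spurious_correlation(n_samples: int, feature_counts: list[int],
                             seed: int = 0) -> dict[int, float]:
    """For each k in feature_counts, the largest |r| between a random label
    and the first k pure-noise features (hypothetical toy experiment)."""
    rng = random.Random(seed)
    label = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]  # carries no real signal
    max_k = max(feature_counts)
    # Every feature is independent Gaussian noise, unrelated to the label.
    features = [[rng.gauss(0.0, 1.0) for _ in range(n_samples)] for _ in range(max_k)]
    abs_corrs = [abs(pearson(f, label)) for f in features]
    # Prefixes of one feature pool: adding features never hides a correlation
    # found earlier, so the returned values are non-decreasing in k.
    return {k: max(abs_corrs[:k]) for k in feature_counts}


if __name__ == "__main__":
    for k, r in max_spurious_correlation(50, [1, 10, 100, 1000]).items():
        print(f"{k:>5} noise features -> strongest spurious |r| = {r:.2f}")
```

With the sample size held fixed, the strongest chance correlation typically climbs as features are added, even though none of them carries any information about the label.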

Reliable performance and explainability are paramount in most practical settings: a model must perform reliably and should be understandable so that it can be effectively audited and controlled. These concerns have led people in the industry to temper their enthusiasm for the promise of AI and instead take a more measured approach toward realizing its full potential. This measured approach has taken various forms, the most promising of which is Data-centric AI.

Data-Centric Approach to AI

The term “Data-centric AI” has been popularized most recently by Professor Andrew Ng, a notable researcher in the AI community who co-founded Coursera and the Google Brain team. According to Dr. Ng, Data-centric AI is the “discipline of systematically engineering the data used to build an AI system” (https://www.andrewng.org/). Dr. Ng advocates engineering the data instead of engineering the model. Under the Data-centric framework, a data scientist takes the utmost care to ensure that the correct data are used to build the model, often going through many iterations of refining the data to achieve optimal performance, while the model remains mostly static.

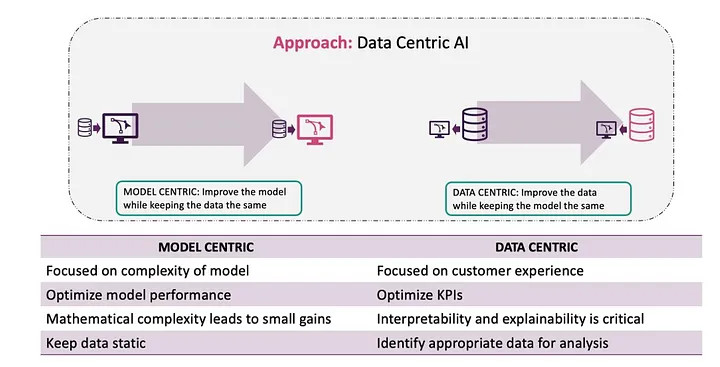

The Data-centric approach is best understood when contrasted with the Model-centric approach (as shown in Figure 2), which is the de facto approach taken by most machine learning practitioners today. Under the Model-centric framework, data are treated as a given, fixed commodity and remain static throughout the model development workflow. The model is frequently updated and tuned to achieve the best possible performance on the static dataset.

Note: Figure 2 has been adapted from https://www.modulos.ai/resources/data-centric-ai-executive-summary/
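As a concrete example of engineering the data rather than the model, one common data-centric step is to look for identical customer questions that were given different intent labels, since such conflicts confuse any model trained on them. The sketch below is our illustration, not Ally’s tooling; the normalization rule, the function names, and the example data are assumptions.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Assumed normalization: lowercase, collapse whitespace, drop trailing punctuation."""
    return " ".join(text.lower().split()).rstrip("?.! ")


def find_label_conflicts(examples: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Return normalized utterances that carry more than one intent label."""
    labels_by_text: dict[str, set[str]] = defaultdict(set)
    for utterance, intent in examples:
        labels_by_text[normalize(utterance)].add(intent)
    return {text: labels for text, labels in labels_by_text.items() if len(labels) > 1}


# Hypothetical training data with one inconsistent label.
training_data = [
    ("What is my current account balance?", "account_balance"),
    ("what is my current account balance", "transaction_history"),  # likely mislabeled
    ("What is Ally's routing number?", "routing_number"),
]
conflicts = find_label_conflicts(training_data)
print(conflicts)
```

In a data-centric loop, each flagged conflict would be reviewed and relabeled (or the intent definitions clarified) while the model stays fixed, and the model would then be retrained on the corrected data.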

While the Model-centric approach can certainly lead to acceptable performance, there are several disadvantages:

Overly Complex: A Model-centric approach leads to more complexity, yet simplicity is key. As data scientists, we should generally prefer simplicity over complexity. The tendency is often to keep training or tuning in search of a model that performs slightly better than the previous one. A data scientist might change the hyperparameters or add more layers to a deep neural network to improve model performance, but these gains often do not materialize in the real world. Further, a highly complex model is far harder to interpret, explain, or debug.

Lack of Data Exploration: A Model-centric approach treats the data as fixed and tends to leave it largely unexamined. A Data-centric approach, by contrast, leads to a thorough examination of the data, highlighting the importance of data exploration and the use of holdout sets as tollgates for model deployment. Data exploration and data validation are often considered the least glamorous parts of the model development cycle, which is surprising since data are the foundation of any model. The insights gained from a thorough examination of the data are critical to a deeper understanding of the problem domain and lead to more efficient and effective AI solutions.

Misses Context: A Model-centric approach fails to adequately account for unhappy paths. All of AI is contextual, and even the best model will fail. We often see that the failure cases are not well thought through. This is particularly true when the model is uncertain, and especially true when dealing with natural language data. The inherent ambiguity of natural language requires flexibility in the model, which does not necessarily fit well with the rigidity of mathematical formulations. Therefore, we need to explicitly design for the cases in which the model fails and account for the customer experience in each case.
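One common way to design for the unhappy path is to act on the model’s prediction only when it is confident enough, and otherwise ask a clarifying question or hand off to a person. The sketch below assumes the classifier returns a probability per intent; the thresholds, action names, and intents are hypothetical and would need to be tuned against real traffic.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical values; in practice these would be tuned on held-out data.
CONFIDENCE_THRESHOLD = 0.70   # minimum probability to answer directly
AMBIGUITY_MARGIN = 0.15       # top-two gap below which we ask the customer to clarify


@dataclass
class BotAction:
    kind: str                      # "answer", "clarify", or "handoff" (illustrative)
    intent: Optional[str] = None   # intent to answer or confirm, if any


def route(intent_probs: dict[str, float]) -> BotAction:
    """Pick a response path from the classifier's per-intent probabilities."""
    ranked = sorted(intent_probs.items(), key=lambda kv: kv[1], reverse=True)
    top_intent, top_p = ranked[0]
    second_p = ranked[1][1] if len(ranked) > 1 else 0.0
    if top_p >= CONFIDENCE_THRESHOLD:
        return BotAction("answer", top_intent)    # happy path
    if top_p - second_p < AMBIGUITY_MARGIN:
        return BotAction("clarify", top_intent)   # e.g. "Did you mean ...?"
    return BotAction("handoff")                   # unhappy path: route to a person


print(route({"account_balance": 0.92, "routing_number": 0.05}))
print(route({"account_balance": 0.45, "routing_number": 0.40}))
print(route({"account_balance": 0.30, "routing_number": 0.10, "card_activation": 0.05}))
```

The first call answers directly, the second asks a clarifying question because two intents are nearly tied, and the third hands off because no intent is likely enough.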

Practical Application

To make this concrete, we walk through one example of how we applied Data-centric approaches to improve the performance of our AI-based conversational agent, the Ally Assist chatbot. The Data-centric framework was used to create a one-of-a-kind holdout set to test the chatbot’s performance before deployment.

One of the core tasks of any conversational AI system is classification: a classification model predicts the customer’s intent so that the AI system can respond accordingly. In a banking domain, suppose the customer’s question is “What is my current account balance?” To respond correctly, the bot should identify the customer’s intent (account balance, in this case) with high probability and not confuse it with any of the other intents it has been programmed to handle (or with requests it was never programmed for). To train a classification model, the model developer uses labeled training data: a list of customer questions paired with their corresponding intent labels.
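To make the classification task concrete, here is a deliberately small intent classifier trained on labeled (question, intent) pairs. It uses multinomial Naive Bayes over word counts, chosen only because it fits in a few lines of standard-library Python; the original post does not say which model Ally Assist uses, and the intent names and training examples are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesIntentClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, examples: list[tuple[str, str]]) -> "NaiveBayesIntentClassifier":
        self.class_counts = Counter(intent for _, intent in examples)
        self.word_counts: dict[str, Counter] = defaultdict(Counter)
        for text, intent in examples:
            self.word_counts[intent].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total = len(examples)
        return self

    def predict_proba(self, text: str) -> dict[str, float]:
        log_scores = {}
        for intent, n_docs in self.class_counts.items():
            counts = self.word_counts[intent]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / self.total)          # log prior
            for word in tokenize(text):
                if word in self.vocab:                     # ignore unseen words
                    score += math.log((counts[word] + 1) / denom)
            log_scores[intent] = score
        # Softmax over log scores gives normalized posterior probabilities.
        m = max(log_scores.values())
        exp = {k: math.exp(v - m) for k, v in log_scores.items()}
        z = sum(exp.values())
        return {k: v / z for k, v in exp.items()}

    def predict(self, text: str) -> str:
        probs = self.predict_proba(text)
        return max(probs, key=probs.get)


training_examples = [
    ("What is my current account balance?", "account_balance"),
    ("How much money is in my checking account?", "account_balance"),
    ("Show me my savings balance", "account_balance"),
    ("What is Ally's routing number?", "routing_number"),
    ("I need the routing number for direct deposit", "routing_number"),
]
clf = NaiveBayesIntentClassifier().fit(training_examples)
print(clf.predict("what's my account balance"))  # account_balance
```

A production intent model would be far richer, but the contract is the same: labeled examples in, a probability per intent out.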

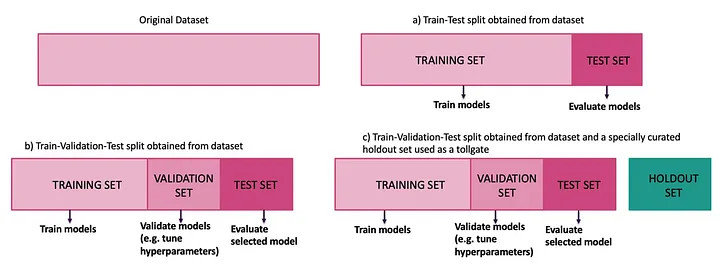

Now consider how datasets are typically split for developing and testing AI and machine learning models. One approach is to create a test set of examples randomly selected from the labeled dataset, often following an 80–20 split (80% of the data used for training and the remaining 20% for testing). This split is shown in part (a) of Figure 3. Another approach, shown in part (b), is to create a train-validation-test split. The key distinction between the validation and test sets is that the model developer is allowed to iterate on the model and tune hyperparameters using the validation set, while the test set is used exclusively for the final evaluation of the model.

Note: Figure 3 is adapted from Validation Set Workflow by Google, used under the terms of CC BY 4.0; Figure 3 is also licensed under CC BY 4.0.
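The two splitting schemes in parts (a) and (b) can be sketched as follows. This is an illustrative helper, not Ally’s pipeline; the 80/20 and 70/15/15 ratios, the function name `split_dataset`, and the placeholder data are assumptions. Shuffling with a fixed seed keeps the split reproducible.

```python
import random
from typing import Sequence, TypeVar

T = TypeVar("T")


def split_dataset(examples: Sequence[T], fractions: Sequence[float],
                  seed: int = 42) -> list[list[T]]:
    """Shuffle once, then cut into consecutive, non-overlapping partitions."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    parts, start = [], 0
    for i, frac in enumerate(fractions):
        # The last partition takes the remainder so no example is dropped.
        end = len(shuffled) if i == len(fractions) - 1 else start + round(frac * len(shuffled))
        parts.append(shuffled[start:end])
        start = end
    return parts


data = [(f"question {i}", "some_intent") for i in range(100)]

# (a) train/test, 80-20
train, test = split_dataset(data, [0.8, 0.2])

# (b) train/validation/test: tune on validation, touch test only for the final evaluation
train, val, test = split_dataset(data, [0.70, 0.15, 0.15])
print(len(train), len(val), len(test))  # 70 15 15
```

Because every partition here is drawn from the same pool, test examples tend to resemble training examples closely, which is the limitation the following paragraphs address.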

If the training data and test data come from the same dataset (as is often the case), the model will likely perform relatively well on the test set, because the test examples will be similar to those seen during training. Model performance is typically measured in terms of precision, recall, and F1-score.
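For reference, per-intent precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is their harmonic mean, 2PR / (P + R). The helper below is a minimal sketch that computes them directly from true and predicted labels; the function name and labels are hypothetical, and libraries such as scikit-learn provide equivalent functions.

```python
from collections import Counter


def per_intent_scores(y_true: list[str], y_pred: list[str]) -> dict[str, dict[str, float]]:
    """Precision, recall, and F1 for each intent (0.0 when undefined)."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1   # predicted intent p, but it was wrong
            fn[t] += 1   # missed the true intent t
    scores = {}
    for intent in sorted(set(y_true) | set(y_pred)):
        prec = tp[intent] / (tp[intent] + fp[intent]) if tp[intent] + fp[intent] else 0.0
        rec = tp[intent] / (tp[intent] + fn[intent]) if tp[intent] + fn[intent] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        scores[intent] = {"precision": prec, "recall": rec, "f1": f1}
    return scores


y_true = ["balance", "balance", "balance", "routing"]
y_pred = ["balance", "balance", "routing", "routing"]
for intent, s in per_intent_scores(y_true, y_pred).items():
    print(intent, {k: round(v, 2) for k, v in s.items()})
```

Here, `balance` has precision 1.0, recall 2/3, and F1 0.8, while `routing` has precision 0.5, recall 1.0, and F1 2/3.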

It is extremely important that the dataset on which model performance is measured matches the distribution of real-world data. For example, if 500 customers ask the bot for their account balance and only 20 ask for Ally’s routing number, then the test set should reflect this real-world fact and contain similar proportions. A second, equally important consideration is that this set remain completely unseen by the model developer; ideally, it should be curated by an independent third party with no knowledge of which examples are included in the training data.
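Matching the test set to real-world proportions amounts to proportional stratified sampling. In the example above, account-balance questions make up 500 / 520 ≈ 96% of traffic and routing-number questions 20 / 520 ≈ 4%, so a 104-example test set would contain 100 and 4 of each. The sketch below computes such an allocation with the largest-remainder method; the function name and intent labels are hypothetical.

```python
import math


def allocate_test_counts(traffic: dict[str, int], test_size: int) -> dict[str, int]:
    """Split test_size across intents in proportion to observed traffic,
    using the largest-remainder method so the counts sum exactly to test_size."""
    total = sum(traffic.values())
    quotas = {intent: test_size * n / total for intent, n in traffic.items()}
    counts = {intent: math.floor(q) for intent, q in quotas.items()}
    leftover = test_size - sum(counts.values())
    # Give the remaining slots to the intents with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda i: quotas[i] - counts[i], reverse=True)
    for intent in by_remainder[:leftover]:
        counts[intent] += 1
    return counts


production_traffic = {"account_balance": 500, "routing_number": 20}
print(allocate_test_counts(production_traffic, 104))  # {'account_balance': 100, 'routing_number': 4}
print(allocate_test_counts(production_traffic, 50))
```

For small test sets, a rare intent can round down to very few (or zero) examples; one might enforce a per-intent minimum, at the cost of slightly distorting the real-world proportions.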

To address these concerns, Ally has pioneered the use of holdout sets to monitor and improve our AI models. Our holdout sets continually grow with real customer interactions and satisfy two main criteria: 1) the distribution of examples in these sets follows the real-world distribution of data, and 2) the sets remain truly unseen during model development and are not used for hyperparameter tuning or cross-validation (as shown in part (c) of Figure 3).
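Both holdout criteria lend themselves to automated checks. The sketch below is our illustration; the function names, the normalization rule, the example data, and the 0.05 tolerance are hypothetical. It flags holdout questions that also appear in the training data after simple normalization, and measures how far the holdout intent distribution is from production traffic using total variation distance (half the sum of absolute differences between the two distributions).

```python
from collections import Counter


def normalize(text: str) -> str:
    # Assumed normalization: case-insensitive, whitespace-insensitive match.
    return " ".join(text.lower().split())


def leaked_examples(holdout: list[str], training: list[str]) -> list[str]:
    """Criterion 2: holdout utterances that also occur in the training data."""
    seen = {normalize(t) for t in training}
    return [h for h in holdout if normalize(h) in seen]


def total_variation(p_counts: Counter, q_counts: Counter) -> float:
    """Criterion 1: 0.0 means identical intent distributions, 1.0 means disjoint."""
    p_total, q_total = sum(p_counts.values()), sum(q_counts.values())
    intents = set(p_counts) | set(q_counts)
    return 0.5 * sum(abs(p_counts[i] / p_total - q_counts[i] / q_total) for i in intents)


training_utterances = ["What is my current account balance?", "What is Ally's routing number?"]
holdout = [("what is my current account balance?", "account_balance"),
           ("How much do I have in savings?", "account_balance"),
           ("Routing number please", "routing_number")]
production = Counter({"account_balance": 500, "routing_number": 20})

leaks = leaked_examples([text for text, _ in holdout], training_utterances)
tvd = total_variation(Counter(intent for _, intent in holdout), production)
print("leaked:", leaks)
print(f"TVD vs production: {tvd:.3f}", "OK" if tvd <= 0.05 else "distribution mismatch")
```

This toy holdout fails both checks: one question duplicates a training example, and routing-number questions are heavily over-represented. Exact matching only catches verbatim overlap; paraphrased duplicates would need a fuzzier comparison.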

The main purpose of holdout sets is to act as tollgates that measure and validate model performance before deployment. By introducing holdout datasets as tollgates, we take a Data-centric approach to issues such as data drift, as opposed to a Model-centric approach that addresses model underperformance through hyperparameter tuning. If the model performs as well on the holdout set as on the validation set used during development, that is strong evidence the model is well specified. If performance drops, however, an investigation must be performed to ensure the model has not been overfit. Typically, work continues until holdout performance returns to a level similar to validation performance, and only then is the new model deployed.
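The tollgate decision itself can be expressed as a simple comparison between development-time and holdout performance. This is a minimal sketch that assumes a single summary score such as macro-averaged F1; the 0.03 tolerance and the names `passes_tollgate` and `TollgateResult` are hypothetical, and in practice the threshold would be set by the team that owns the model.

```python
from dataclasses import dataclass


@dataclass
class TollgateResult:
    passed: bool
    validation_f1: float
    holdout_f1: float
    drop: float


def passes_tollgate(validation_f1: float, holdout_f1: float,
                    max_drop: float = 0.03) -> TollgateResult:
    """Deploy only if holdout performance is close to validation performance.
    A larger drop suggests overfitting to the development data or data drift,
    and should trigger an investigation instead of a deployment."""
    drop = validation_f1 - holdout_f1
    return TollgateResult(passed=drop <= max_drop, validation_f1=validation_f1,
                          holdout_f1=holdout_f1, drop=drop)


print(passes_tollgate(validation_f1=0.91, holdout_f1=0.90).passed)  # True: small drop
print(passes_tollgate(validation_f1=0.91, holdout_f1=0.78).passed)  # False: investigate
```

Gating on the drop rather than on an absolute score keeps the check focused on whether development results generalize to real customer traffic.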

Closing Thoughts

When building customer-facing AI systems with the Data-centric AI approach described in this article, we emphasize business Key Performance Indicators (KPIs), such as customer satisfaction and customer engagement, over academic performance measures such as F1-scores. In addition, simplicity is better than complexity when it comes to AI and machine learning models: simplicity not only helps us build models more efficiently but also makes them easier to interpret, fix, and improve.

Finally, Data-centric AI keeps us vigilant to the fact that AI and machine learning models can and will fail to make accurate predictions. To ensure that customers are not negatively impacted, we increasingly account for unhappy paths and strive to provide quality experiences for all our customers. To us as data scientists, this is what being a relentless Ally for our customers is all about.

Interested in joining Ally's team of talented technologists to make a difference for our customers and communities? Check out Ally Careers to learn more.